Scientists Censoring Science

"Censorship: It's Good for Society. Trust Me. I'm a Scientist!"

Key Points from Our Paper on Scientists Censoring Science

The paper is out and open access. It's not too long, and as a perspective piece it has nothing highly technical, so I encourage you to read the whole thing. Here I summarize a few key points, then use the paper as a platform to point out things that did not make it into the paper.

Key Point 1: Scientists Censor Science

I know, it's the title, but many people seem to be blithely unaware that censorship is not something only governments do. The First Amendment of the U.S. Constitution (“1A”) only prohibits government from censoring most speech; it has no bearing on non-government entities, from corporations to private universities to individuals (state universities are another matter: they are officially state organs, so the First Amendment does apply to them).

But just because 1A only applies to government censorship does not mean other types of censorship do not exist. Other types are all over the place, from corporate nondisclosure agreements to Amazon refusing to list certain books. From the paper:

Censorship is, I believe, especially egregious for institutions tasked with truthfinding, such as the natural, biomedical, and social sciences, and media and news outfits. It is also toxic to discourse around moral and political issues, many of which revolve around opinions and values rather than facts. Social sciences involve all of these types of discourse to some degree, so it's particularly bad when censorship rears its ugly head there.

Key Point 2: Rejection for Failing to Meet Conventional Scientific Standards is not Censorship

We did point out in the paper that rejection is not censorship, but, due to space limitations, we did not address it in much depth. However, we did address it in this paper:

We wrote [1]:

Suppression occurs when the fear of social sanctions prevents ideas from being explored or empirical findings from being presented in scientific or public forums. In science, rejection occurs when an idea has been explored and the evidence has been found wanting. The history of science is replete with rejected ideas, such as a geocentric solar system, young Earth, spontaneous generation of life, and the phlogiston theory of air. These ideas were thoroughly explored and rejected because the evidence available overwhelmingly disconfirmed them.

In contrast, suppression prevents an idea even from being explored. Historically, this has occurred for a wide variety of reasons, including that the idea constitutes religious heresy, political anathema, or premature canonization of the wrong idea.

However, our more recent PNAS paper correctly pointed out some deep ambiguities in how this can work in the real world:

many criteria that influence scientific decision-making, including novelty, interest, “fit”, and even quality are often ambiguous and subjective, which enables scholars to exaggerate flaws or make unreasonable demands to justify rejection of unpalatable findings. Calls for censorship may include claims that the research is inept, false, fringe, or “pseudoscience.” Such claims are sometimes supported with counterevidence, but many scientific conclusions coexist with some counterevidence. Scientific truths are built through the findings of multiple independent teams over time, a laborious process necessitated by the fact that nearly all papers have flaws and limitations. When scholars misattribute their rejection of disfavored conclusions to quality concerns that they do not consistently apply, bias and censorship are masquerading as scientific rejection.

Censorious reviewers may often be unaware when extrascientific concerns affect their scientific evaluations, and even when they are aware, they are unlikely to reveal these motives. Editors, reviewers, and other gatekeepers have vast, mostly unchecked freedom to render any decision provided with plausible justification. Authors have little power to object, even when decisions appear biased or incompetent.

The inherent ambiguities in peer review can also lead scholars whose work warrants rejection to believe erroneously that their work has been censored…The potential for camouflaged censorship by decision-makers and inaccurate charges of censorship by scholars whose work warrants rejection makes identification of censorship challenging.

Key Point 3: Censorship of Science by Scientists is Rising

The Foundation for Individual Rights and Expression (FIRE) maintains a Scholars Under Fire Database, in which it tracks incidents where scholars are targeted for some type of punishment for speech that is protected by academic freedom or 1A, or, often, both. Typically, this manifests as some sort of formal “investigation,” the endurance of which is punishment enough even if no further sanctions are implemented. This is from the PNAS paper and shows a dramatic spike in the targeting of scholars for punishment over the last 22 years.

Indeed, in a recent essay on this issue, Greg Lukianoff (who heads up FIRE) presented their evidence that there have been more firings of professors for what should have been protected speech than occurred during the McCarthy Era red scare.

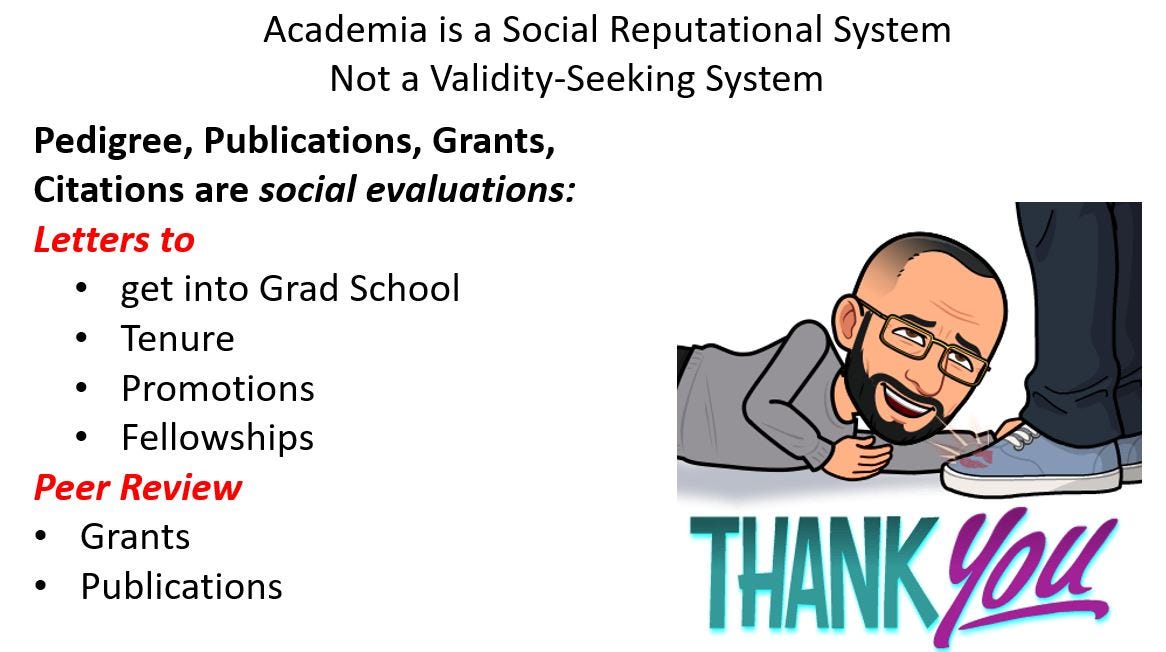

Although it did not make the PNAS paper, we noted in The New Book Burners that most such attacks from the right came from outside of academia, whereas most attacks from the left came from within academia. This is important because, we suspect, academics can usually wear as a badge of courage attacks from the right outside of academia; but attacks from the left within academia are far more career threatening because academia is such a status-based reputational system:

Which gets us to:

Key Point 4: Self-Censorship

People are not usually stupid. When they realize “hey, if I cross the mob, the mob might come for me,” they conclude, “Maybe I’ll just not say stuff that will piss off the mob.” When it is your Uber driver keeping his political opinions to himself because why risk a tip or a low rating, it’s one thing. When it’s scientists mobbing scientists for expressions of wrongthink, it can corrupt whole ostensibly “scientific” literatures. Plenty will get the message that “maybe I should not make that claim even if my data support it” or, better yet, “maybe I just won’t study that.”

As we put it in the PNAS paper:

A recent national survey of US faculty at four-year colleges and universities found the following: 1) 4 to 11% had been disciplined or threatened with discipline for teaching or research; 2) 6 to 36% supported soft punishment (condemnation, investigations) for peers who make controversial claims, with higher support among younger, more left-leaning, and female faculty; 3) 34% had been pressured by peers to avoid controversial research; 4) 25% reported being “very” or “extremely” likely to self-censor in academic publications; and 5) 91% reported being at least somewhat likely to self-censor in publications, meetings, presentations, or on social media.

A majority of eminent social psychologists reported that if science discovered a major genetic contribution to sex differences, widespread reporting of this finding would be bad. In a more recent survey, 468 US psychology professors reported that some empirically supported conclusions cannot be mentioned without punishment especially those that unfavorably portray historically disadvantaged groups.

Key Point 5: “Prosocial Motives” Inspire Scientists to Censor Science

This is the most annoying (to me) aspect of this paper. I mean, it is true in a phenomenological sense. People often believe they are doing something good by engaging in censorship. As we put it in the paper:

Censorious scholars often worry that research may be appropriated by malevolent actors to support harmful policies and attitudes. Both scholars and laypersons report that some scholarship is too dangerous to pursue, and much contemporary scientific censorship aims to protect vulnerable groups.

Perceived harmfulness of information increases censoriousness among the public, harm concerns are a central focus of content moderation on social media, and the more people overestimate harmful reactions to science, the more they support scientific censorship. People are especially censorious when they view others as susceptible to potentially harmful information. In some contemporary Western societies, many people object to information that portrays historically disadvantaged groups unfavorably, and academia is increasingly concerned about historically disadvantaged groups. Harm concerns may even cause perceptions of errors where none exist.

Prosocial motives for censorship may explain four observations: 1) widespread public availability of scholarship coupled with expanding definitions of harm has coincided with growing academic censorship; 2) women, who are more harm-averse and more protective of the vulnerable than men, are more censorious; 3) although progressives are often less censorious than conservatives, egalitarian progressives are more censorious of information perceived to threaten historically marginalized groups; and 4) academics in the social sciences and humanities (disciplines especially relevant to humans and social policy) are more censorious and more censored than those in STEM.

So why am I annoyed? Because, duh, just about everyone thinks just about everything they do is for some wonderful purpose. Think I am kidding? As Jacob Mchangama pointed out in his terrific book on the history of free speech, some antebellum jurisdictions criminalized the distribution of abolitionist pamphlets on the grounds that they hurt southerners’ feelings. He also discussed how the Nazis called some of their laws prohibiting political meetings and demonstrations “For the Protection of the German People.”

So, sure, the intellectuals frame arrogating to themselves the right to censor as fulfilling some higher social good. And then they deny that it is censorship. Major red flags are statements of the form “We love academic freedom but…”

Consider Nature’s new ethics policy statements:

Although academic freedom is fundamental, it is not unbounded.

There is a fine balance between academic freedom and the protection of the dignity and rights of individuals and human groups.

The first statement is literally true but refutes a straw argument (is anything unbounded?) and provides no justification for any particular restriction on academic freedom.

The second quote (“There is a fine balance…”) is actually absurd. There is literally no exercise of academic freedom, at least in the sense of publishing something, that could possibly infringe on someone else’s rights. Someone could publish “Joe Shmo deserves the death penalty” and no one’s rights are abridged, not even Joe’s. It really is an absurd statement reflecting a surrealistically superficial understanding of what a “right” is.

And then, Nature doubled down, “explaining” its new policy (hint: Be on the lookout for the Mule of Censorship’s Big Bad “But”[t]s):

Science is the pursuit of knowledge — but the pursuit of knowledge cannot be at all costs.

Ah, yes, there is that big bad but again, again prefacing a straw argument… Our paper actually addressed the only known reasonable conditions under which this might be implemented. From our PNAS paper:

It may be reasonable to consider potential harms before disseminating science that poses a clear and present danger, when harms are extreme, tangible, and scientifically demonstrable, such as scholarship that increases risks of nuclear war, pandemics, or other existential catastrophes.

So, yeah, even we agree that it’s OK for Nature (or anyone else) to block publication of an article informing teenagers how to make a nuclear bomb in their basement or culture Covid in their kitchen. But beyond that?

Nature continues their “explanations”:

Ethics review is also limited to preventing or minimizing harms that might arise when research is carried out, but not potential harms that can occur when research is published and shared with the world. For example, ethics review does not consider risk of harm that might come about from the way researchers draw conclusions or make policy recommendations based on their research.

Again, this is ridiculous because there is no possible risk of harm from researchers drawing conclusions or making policy recommendations. There is risk of harm from people, including government officials, education bureaucrats, and other policymakers doing stupid or malicious things. If only someone had come up with a system of government that prevents any one individual from getting too much power, and in which the people can hold their officials accountable for actions deemed stupid or malicious.

Nature continues:

Is this guidance about content that might offend?

We draw a distinction between causing harm and giving offence. Something is harmful if it undermines or violates someone’s rights as set out in foundational United Nations human rights treaties.

Again, this is either idiocy or a null set. It is idiocy because academic papers cannot possibly violate anyone’s rights by virtue of anything they have stated in those papers (except for exceedingly narrow cases already covered by law) [2]. Or, it refers to a null set, because, yes, there is a distinction between harm and offence, but the set of papers that violate rights is empty: nothing stated in a published academic paper can possibly violate anyone’s rights.

I mean, I could make some really gross and offensive statements about people or groups here, aggressive, terrible statements, ones that glorify horrendous violence, involving torture, mutilation, depravity and debasement. No matter what I said, no one’s rights would be violated. Nature is offering pure idiocy dressed up as a deep and moral concern for others’ rights.

But as David Pinsof put it in his great essay, Morality is not Nice:

What is “morality”? Intellectuals have pondered the question for millennia. As far as I can tell, their answers have converged on something like this:

Morality is about working together to serve the greater good. Our moral compass guides us to resolve our conflicts and treat each other with respect. Without morality, we would lie, steal, and kill. Morality makes us better people.

It’s a nice story. It makes me feel good when I read it. But unfortunately, it’s bullshit.

The truth is, morality is more about deluding ourselves into thinking we’re serving the greater good than serving it. Our moral compass more often guides us to reject compromise and disrespect each other than resolve our conflicts and respect each other. Morality helps us lie, steal, and kill, by giving us a menu of excuses to choose from. Want to lie? Tell a “noble lie”. Want to steal? Take “what you rightly deserve”. Someone has to go? Serve them a cold helping of “justice”. Morality often makes us worse people, by justifying our sadism and fueling our sense of moral superiority.

Morality, in other words, is not nice.

So, sure, scientists frame their motives in pro-social ways. PNAS imposed tight word limits, so there were many things that could not get in there. And one of the most annoying, to me, was that we ended up framing it around “pro-social” motives, which pretty much implicitly accepts the censors’ framing of their ostensibly benevolent motives. They see their motives as benevolent, but the rest of us do not need to see it that way.

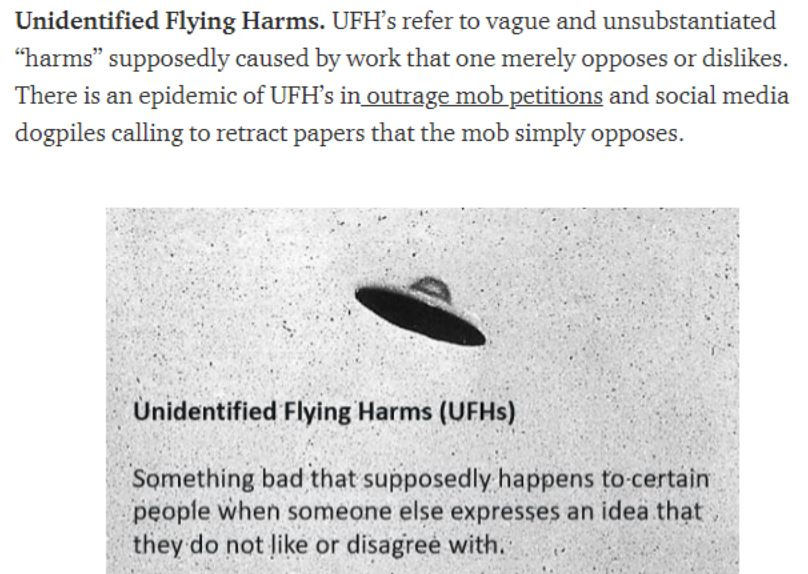

Key Point 6: Where’s the Beef?

Let’s, for the moment, take seriously concerns about publications producing some sort of “harm.” To take it seriously, one would need:

A credible basis (typically scientific) for believing that such a harm has more than an infinitesimal likelihood of occurring.

Some rigorous, quantitative measure of the nature, extent or seriousness of the harm.

Some rigorous evaluation of the net benefits/harms that come from publishing the work versus censoring it.

Neither Nature, nor anyone else, provides this sort of analysis when shrieking “harm!” as a basis for any particular act of censorship. And shriek is a fair characterization. For example, this is from the open letter demanding retraction of Gilley’s (2017) defense of colonialism:

“The sentiments expressed in this article reek of colonial disdain for Indigenous peoples and ignore ongoing colonialism in white settler nations…this article is harmful and poorly executed pseudo-"scholarship" and should be retracted immediately.”

Reek it may, but there is not a shred of evidence of “harm” in the entire open letter calling for retraction.

I mean, if all you have are the fears, biases, preferences, values and delusions of academic intellectuals (e.g., “peer reviewers”) then you have no evidence at all. Which is, in fact, the “nature” of the “evidence” that Nature has about harms.

The costs of censorship, by contrast, are typically more obvious than its benefits. Our paper nails some of this:

There is at least one obvious cost of scientific censorship: the suppression of accurate information.

Scientific censorship may also reduce public trust in science. If censorship appears ideologically motivated or causes science to promote counterproductive interventions and policies, the public may reject scientific institutions and findings.

There is good evidence for that reduced public trust, some of which is reviewed in our paper. I’ve presented this before, but this graphic from the paper by one of our collaborators, philosopher Hrishikesh Joshi, is a thing of beauty. It shows how censorship can corrupt an entire area of scientific publishing:

Worse, inasmuch as people tend to exaggerate harms and underestimate benefits from publishing controversial or even offensive findings, there is no reason to have any confidence in the entirely subjective and data-free judgments of editors or peer reviewers. So, even taking seriously the potential for “harm,” the threshold to justify blocking publication would need to be very high, high enough to exceed the known harms from censorship itself. The Nature editorials were entirely oblivious to this point.

Conclusion

One of the limitations of our paper is that we presented no concrete examples, so it is all very abstract. One type of example of scientists censoring science is forced retractions of articles that fail to meet C.O.P.E. guidelines for retraction:

So if a paper is found to have massive data error or fraud, it should be retracted. That is not censorship. What this means, however, is that papers without data, such as reviews, narrative pieces, opinion or perspective articles, or theory papers, should never be retracted unless, e.g., they fabricate quotes or cite articles that do not exist. So here are some examples you can track down, if the spirit moves you. In none of these cases did anyone even accuse any author of data fraud or massive error:

Or, if you prefer, you can read about several cases in The New Book Burners, recently posted here at Unsafe Science.

I’ve posted this before, too, but it bears repeating. The Fiedler on the Roof/Nonexistent Racist Mule/PoPS Fiasco raised attempted book burning to a whole new level — it remains the only case I know of in which an academic outrage mob sought the retraction of, not a single paper, but an entire set of papers and commentaries (as most of you know, one of those papers is mine).

By virtue of constituting an attempt at Maoist-style repression (plus re-education and confiscation of private property), it reflected the depraved nature of the regressive ideological “norms” [3] and values now disturbingly widespread in academia. And if you have any doubt about that depravity, go here, here, or here.

Epilogue

Several of my collaborators on this paper have posted their own essays with additional perspective, often building on the core ideas:

Free Speech Advocates are often Hypocrites: This Doesn't Make the Cause Less Important, at the online magazine, Reason. By Musa al-Gharbi, who is pretty much always brilliant. Excerpt:

The appropriate response to selective concern in one direction isn't selective concern in the other direction. That's a recipe for keeping anyone from enjoying a free atmosphere.

More broadly, it's an error to understand the interests of historically disadvantaged and historically dominant groups to be diametrically opposed. When people from historically privileged groups are facing censorship, that doesn't mean people in historically marginalized groups are actually being empowered.

Indeed, although censorial tendencies are frequently justified by the desire to protect vulnerable and underrepresented populations from offensive or hateful speech, speech restrictions generally end up enhancing the position of the already powerful at the expense of the genuinely marginalized and disadvantaged.

Science has a Censorship Problem: The Motives are Benign; the Effects are Insidious. Inside Higher Education. By PNAS paper lead author Cory Clark and Musa al-Gharbi.

Excerpts:

Editors are granting themselves vast leeway to censor high-quality research that offends their own moral sensibilities, or those of their most sensitive readers.

Moral motives have long influenced scientific decision-making. What’s new is that journals are now explicitly endorsing moral concerns as legitimate reasons to suppress science.

There is No Way I am Publishing That. Psychology Today, by Glenn Geher. Excerpts:

In a recent study of self-censorship within the academy, a full 91% of researchers reported that they were at least somewhat likely to self-censor when it came to presenting academic ideas across a broad array of contexts (see Honeycutt et al., 2022).

As described above, such self-censorship is, interestingly, motivated out of prosocial reasons. This said, from the perspective of a pure academic, as we point out in our paper (Clark et al., 2023), such self-censorship is, especially in the long-term, quite problematic. It has the often-unwitting effect of stifling or even covering up the truth.

And if we, as behavioral scientists, are interested in knowing what humans are truly like—in what truly makes us tick—censoring significant findings and topics that bear on the broader human experience has the potential to stifle our understanding of who we are.

A good short summary of the PNAS paper by collaborator Steven Stewart-Williams can be found on his excellent Substack.

And Jerry Coyne, who was not a collaborator on this paper, but was on the defense of merit paper, and who hosts the fabulous blog WhyEvolutionisTrue, had this essay:

Censorship in Science. He has some pretty good criticisms of the paper as well, some of which I tried to address here (such as the paper not having concrete examples to clarify exactly what was under discussion).

Footnotes

1. For readability, I have deleted citations from all quotes, but you can find them in the main papers.

2. Defamation and libel are exceptions, but this is still either a null set in the real world or a null set for all practical purposes (even if you can find an exception or two), because academic papers are not in the business of leveling accusations against living individuals. Nature is not about to publish a paper in which Professor X declares that “Fred [who has never been convicted of any crime] is an extortionist.” Plagiarism would be another exception. So in some sense, academic papers can violate rights, but not because of their ideas, claims, or conclusions, which is the point of this essay.

3. Kurt Gray, one of the signatories to the Maoist Open Letter summarized here, made a point of defending doing so at a conference (organized by Joe Forgas on [amusingly & ironically] the psychology of tribalism), on the grounds that Fiedler violated “publishing norms.” As with Nature and harms, these were Unidentified Flying Norms in the sense that he never stated what norms were violated. You can find out more about Gray in my essay, Meet the New Book Burners, which goes through his and others’ credentials and gross hypocrisy.

This is excellent stuff, which anyone interested in academic freedom ought to read. Censorship comes too often from the point of view of deciding 'what is moral'. But no one becomes morally enlightened by gaining a higher degree in anything. Sometimes, it might be quite the reverse. Science is about the pursuit of truth, and sometimes the truth just isn't nice.

"Unidentified flying harms" - Black magic. Sorcery. It's the witch-hunt all over again.