Reviewing Nature's Reviews, Part II

They Told Us We Should Not Try to Replicate Moss-Racusin et al. (2012)

Summary Up Front

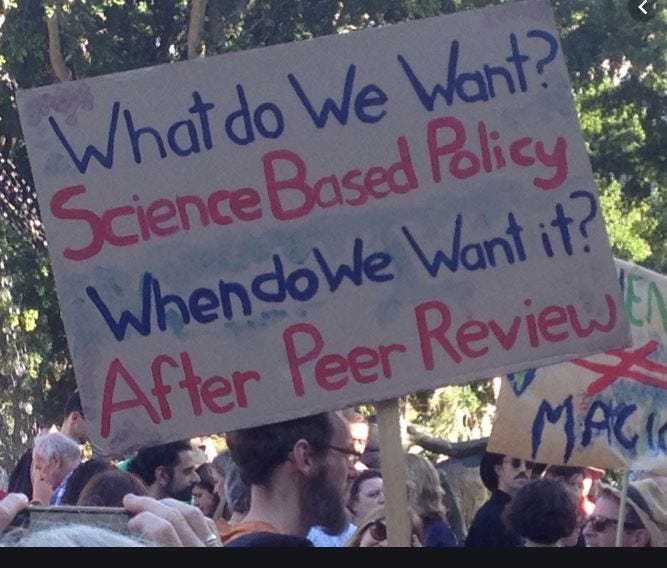

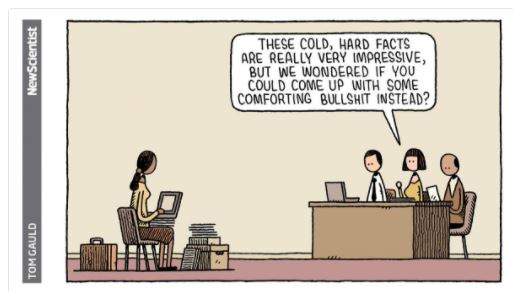

In this essay, I highlight how three of the reviews of our proposal to replicate a famous study (submitted to Nature in 2019, which rejected it) all argued that we should not do so, a profoundly antiscientific attitude towards … science.

Background, in Brief

Our reversal of the famous and massively influential Moss-Racusin et al. (2012) study (hereafter “M-R”), which found biases in academic science hiring favoring men, is now in press. My first essay on this includes a link to M-R and to both our full report and supplementary materials. Using methods nearly identical to those M-R used in their study of 127 faculty, we conducted three studies with over 1,100 faculty and found biases against men, not women.

In my prior essay, I pointed out that we initially submitted the proposal for a registered replication report to Nature, which rejected it on, as per one reviewer, the manifestly false grounds that the study could not be replicated. That essay explained why such grounds are ridiculous, described the steps we took to empirically test the reviewers’ analysis, and described the results resoundingly disconfirming the reviewers’ analysis.

In this essay, I review the other reviewers’ criticisms, which were not that the study could not be replicated, but that it should not be replicated.

You Should Not Replicate Moss-Racusin et al. (2012)

Reviewer 2:

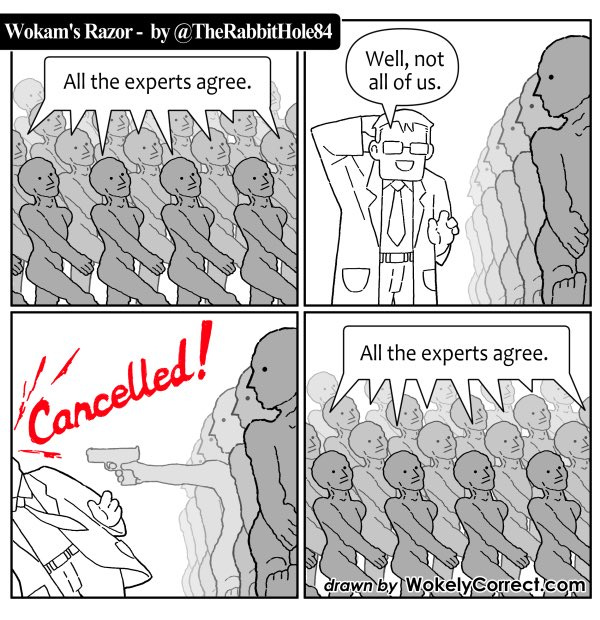

In my view, the Moss-Racusin et al. paper was significant because of its valuable contribution and also because it appeared in PNAS, was edited by Shirley Tilghman, and undertaken by an interdisciplinary team of social and natural scientists. The proposed replication of this study would also need to replicate these features… [emphasis added by me for this post, Lee].

Replicate the editor?

Replicate the interdisciplinary team? The study was straight-up social psychology; there was no chemistry, biology, physics, paleontology, or geology in it at all. Our replication was virtually guaranteed to be better than the original (and it was) by virtue of including two social psychologists (Lewis and me) active in the psychological science reform movement, which is dedicated to improving on the bad old days that produced the Replication Crisis. And just in case you missed it in the original post, this table compares the methodological quality and rigor of M-R versus our replications:

Regardless, the entire point of replication is to determine whether a published report of some result or phenomenon is credible. Do different researchers using the same methods get the same results? Replicating the disciplinary background of the original team is not merely irrelevant; it's ridiculous.

Reviewer 2 continued:

1. The first design flaw is extremely serious. Gender bias is measured by reaction to the names “John” and “Jennifer.” These names are ethnically specific. Gender cannot be measured apart from intersecting social features. If this paper retains this aspect of the design, the best claim it can make is that STEM faculty are biased against white female applicants.

I am not going to bother to address this on the merits, because the merits are irrelevant. The entire point of replication is to test whether different researchers get the same results using the same methods. A study testing the same hypothesis using different methods (e.g., varying the race/ethnicity of names) might be interesting, but it would not be a close replication. Translation: M-R should not be replicated.

Reviewer 3

R3 looovvveesss replication:

I am, of course positively disposed to replication. It is a cornerstone of our science.

But not in this case:

Should the study [M-R] be replicated – sure. Did the authors make a compelling case? I was not fully convinced. Though I favor replication of this (and just about any study), I’m not favorably disposed to the current registered report (i.e., I do not think a positive decision is warranted given the current presentation of the authors’ case).

Why not, pray tell?

The setup of the paper does not really provide adequate justification for conducting the study.

Here is a sufficient rationale to conduct the replication, and it was in the proposal: M-R was massively influential. It was a small study. That alone should be sufficient. The End.

However, it was not The End (of our proposal). We also pointed out, correctly, that studies of sex bias in academic science hiring had produced contradictory results. All the more reason to see whether a foundational study (for claims about pervasive gender bias in STEM) replicates. The End.

That really is all the intro we needed, though of course there was more. How did the reviewer respond?

First, the way the case is laid out, the reader expected the authors to propose research that would help explain or reconcile these seemingly conflicting results.

That would not be a replication. Again, this is a variant of “They should not perform a replication.” “I love replication, but … no.”

Reviewer 3 continued:

In addition to replication, the author's main argument for extension is that it is necessary to evaluate whether the results from Moss-Racusin et al. (2012) generalize across STEM fields, or in other words, to technology, engineering, and mathematics. However, the authors provide no theoretical argument for why one might think gender bias would be the same or different in the “S” vs. “TEM” fields. Whether the authors think gender bias would be similar or different in the various fields, they need to justify their argument from a theoretical standpoint.

No, we don’t, and we didn’t. The rationale is practical: everyone and all their friends and relations routinely cited the study as evidence of sex bias in STEM, even though M-R focused only on the S (science, not technology, engineering, or math). We simply asked the empirical question, “Do the biases M-R found generalize to TEM?” In the actual studies, not only did they fail to generalize; we found the same pattern of biases against men in all the STEM fields we studied. Theory can go fuck itself.

Back to Reviewer 3:

the proposed extension to evaluate the generalizability to very related fields is not a satisfying extension. Rather than direct replication and an extension that is not strongly supported by a theoretical perspective, it would be more interesting for the authors to add an extension to evaluate mechanisms or to utilize a similar paradigm in a different context (e.g., grading of assignments, hiring of assistant professors, etc.).

Please excuse me for stating the obvious. Those would be different outcome variables. However useful it might be to conduct a different study with different outcome variables, that would not be a replication. Translation: “M-R should not be replicated.”

Reviewer 4:

A key limitation of the original study, and inherited by the planned replication, is that the key ‘gender’ manipulation involves only a single item (i.e., John/Jennifer). This vastly limits generalizability – and makes both studies vulnerable to the possibility that the results can be explained by an artefact i.e., some idiosyncratic feature(s) of this name pair, unrelated to gender, is contributing to the observed outcomes (e.g., John/Jennifer could be a more common name and the outcome is thus influenced by familiarity bias as well as, or instead of, gender bias). To address this, it might be worth considering using a number of different name pairs…

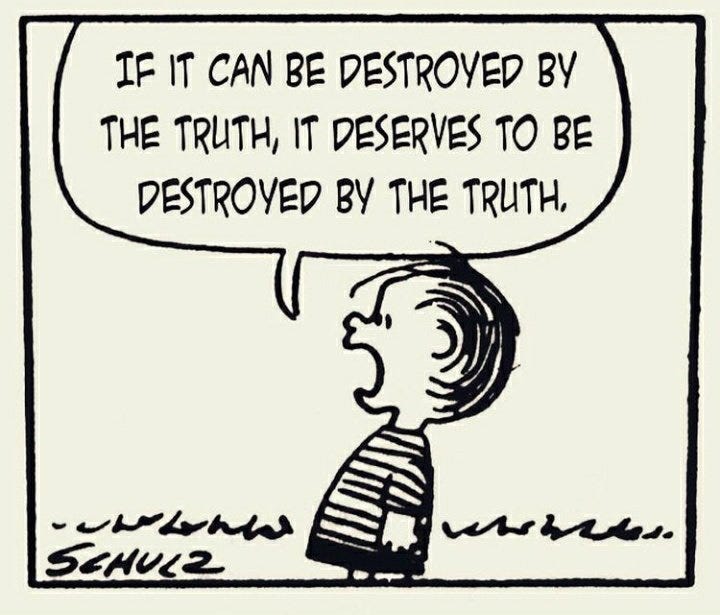

So what you are really saying is, “Given the limited generalizability of M-R, maybe the WHOLE FUCKING WORLD OF ACADEMIA SHOULD NOT HAVE BEEN MASSIVELY CITING IT AS EVIDENCE OF BIAS AGAINST WOMEN IN STEM.” That is what you are saying, right? Right? RIGHT?

Had we used a medley of different names, the studies would not have been a close replication of M-R. Translation: M-R should not be replicated.

Back to Reviewer 4:

I would argue that this question of ‘Did it replicate?’ is not actually that informative.

So we shouldn’t conduct one?

I think the underlying motivation for asking this question, is that if we cannot obtain a finding similar to the original study when we attempt to closely repeat its methods, then perhaps we should discount that original finding.

Dear R4, this is exactly correct. You should have stopped here and recognized the value of conducting a replication. This is the entire point of replication. Unfortunately, you continued:

However, in the present context we are dealing with stochastic phenomena and replications cannot be exact.

If “exact” means “the replication cannot be conducted at the time Moss-Racusin et al. (2012) conducted their study,” this statement is literally correct. Otherwise, methods as nearly identical as we could discern from their report could be, and were, used.

Thus, there can be multiple reasons why a replication study will obtain a different finding to an original study, and only some of those reasons (e.g. fraud, error, or serious undetected confound) would lead us to discount the original finding.

Perhaps your scientific epistemology, dearest reviewer, is that “only fraud, error or undetected confounds” can explain replication failures. If so, it is an abysmal epistemology, because there are many other reasons a study may not replicate that do, in fact, raise credibility questions about the original finding:

False positives. Small studies, such as M-R, often produce “statistically significant” results even though there is no real effect.

Selective reporting. How many similar studies that found no biases, or biases against men, went unreported?

Publication bias. The published literature may be biased against reporting nonsignificant results and in favor of findings that support “social justice narratives,” such as the supposed existence of pervasive biases against women. If so, the published literature may distort reality: it can be filled with studies finding biases against women even when the world itself is not so biased. This indeed seems to have been the case.

Reviewer 4 continued:

I would suggest (and the authors should certainly correct me if I’m wrong!) that fundamentally the authors are interested in the research question of whether STEM Faculty Biased Against Female Applicants, and the “Did it replicate?” question is not relevant.

Bingo! “The ‘Did it replicate?’ question is not relevant”???!!!???!!! Translation: Who cares if the study replicates?

Reviewer 4:

To address the research question, one would need to consider all of the relevant evidence (quantitatively, the effects could be combined in a meta-analysis).

So, instead of performing the replication, we should have performed a meta-analysis? Translation: M-R should not be replicated.

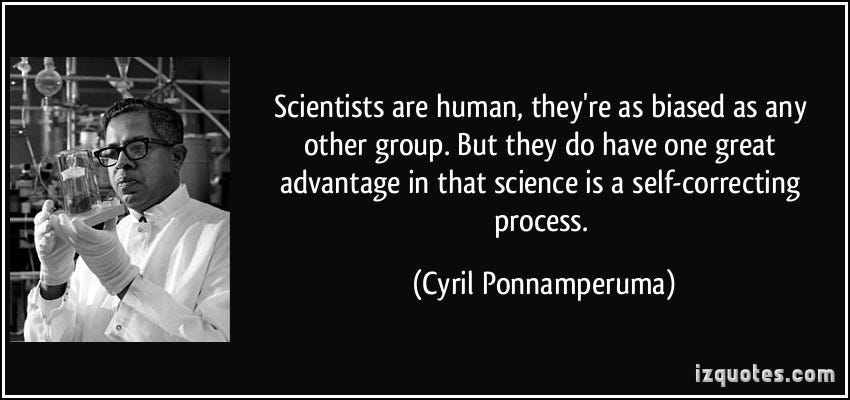

Dressed up in these sophisticated-sounding critiques, all four reviewers expressed profoundly unscientific, even anti-scientific, views (Reviewer 1 implied that M-R could not be replicated; the other three argued that it should not be). The next time you hear scientists pontificating about how “science deserves its credibility because, when it gets things wrong, it is self-correcting,” remember these reviews from Nature, one of the most prestigious of all “scientific” journals. Replication is the most direct route to scientific self-correction. And they told us we should not do it.


Commenting

Before commenting, please review my commenting guidelines. They will prevent your comments from being deleted. Here are the core ideas:

Don’t attack or insult the author or other commenters.

Stay relevant to the post.

Keep it short.

Do not dominate a comment thread.

Do not mindread; it’s a loser’s game.

Don’t tell me how to run Unsafe Science or what to post. (Guest essays are welcome and inquiries about doing one should be submitted by email).
