Adversarial Collaborations

An exchange about the (de)merits of recommendations in our recent paper

This is a collaborative post involving Cory Clark, Chris Ferguson and me on the merits of and obstacles to adversarial collaborations, in which researchers who hold very different views about some phenomenon, and who have often publicly staked out very different positions, work together to empirically resolve some, or maybe all, of those differences.

Cory Clark is a Visiting Scholar at University of Pennsylvania. Along with Professor Philip Tetlock, she co-founded the Adversarial Collaboration Project, where she helps disputant scholars resolve their scientific disagreements--and ideally, become friends.

Chris Ferguson is a professor of psychology at Stetson University in Florida. He has written numerous articles on video game violence, social media, race and policing and other moral panics. He has also published several non-fiction and fiction books for the general public including his recently released YA gothic horror novel The Secrets of Grimoire Manor. He lives in Orlando with his wife and son.

Cory posted a thread on X summarizing our just-out article advocating for more adversarial collaborations to attempt to resolve seemingly intractable scientific and political controversies in the published psychology literature (the principles extend well beyond psychology):

Nonetheless, from the paper:

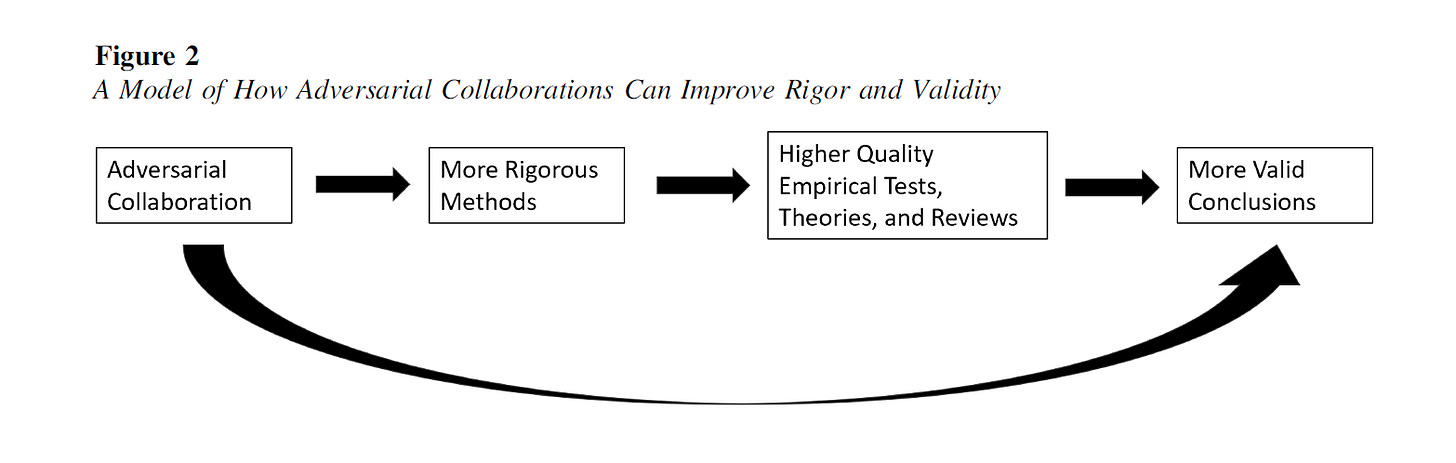

The idea is that adversaries can hold each other’s feet to the fire to ensure that high quality, high rigor methods are used to provide the strongest possible attempts to falsify each other’s pet theories and hypotheses. Although there is no guarantee, a claim that passes such “severe tests” should usually be more credible than claims subjected to softball tests built on researchers’ preferences or unintentional biases in favor of their work.

From Cory Clark’s X thread:

In 2003, Kahneman published an article in American Psychologist describing the magic of collaborating with Tversky but also lamenting how current modes of scientific disagreement were unnecessarily hostile and unproductive. He hoped for a better way: Adversarial Collaborations.

She highlighted this quote:

One of the lessons I have learned from a long career is that controversy is a waste of effort. I take some pride in the fact that there is not one item in my bibliography that was written as an attack on someone else’s work, and I am convinced that the time I spent on a few occasions in reply–rejoinder exercises would have been better spent doing something else. Both as a participant and as a reader, I have been appalled by the absurdly competitive and adversarial nature of these exchanges, in which hardly anyone ever admits an error or acknowledges learning anything from the other. Doing angry science is a demeaning experience—I have always felt diminished by the sense of losing my objectivity when in point-scoring mode

Lee here: I cannot say how much I disagree with Kahneman here. The best way to correct the record is to … attempt to correct the record.

One might reply, “Well, if it’s wrong, just do a study showing it’s wrong!” Sometimes that works; sometimes it does not. Here is one of my favorite examples of both:

Points 1 and 2 were, eventually, debunked by research showing that, in fact, conservatives and liberals are about equally biased and that conservatives are generally happier than liberals. I do not know whether empirical research can show that people do or do not “rationalize” things; generally, it is far easier to show what people do and do not believe than how they arrived at their beliefs. I suppose one might be able to do some sort of cognitive dissonance studies1 to examine whether conservatives are more prone to rationalizing anything, including inequality, but, as far as I know, it has not been done. Instead, the work Jost et al. reviewed that served as the basis for the “rationalize inequality” claim simply showed that conservatives were more accepting of inequality. This is no evidence of “rationalization” whatsoever, and saying so seems both appropriate and important.

And 4? There is no “study” that can be performed to “show” that Stalin and Castro were not only not conservatives, but far left extremists (that is kinda inherent to any sensible understanding of communism). They argued that Stalin, Castro (and, presumably, Mao, Pol Pot and the whole gallery of mass murdering communist dictators) could be considered conservatives because they gained power and tried to hold it, meaning that they were “preserving the status quo.” But, by this definition, any leftist who ever gains power and tries to hold it instantaneously becomes “conservative,” which is about as whackadoodle as you can get. No studies needed, just a modicum of common sense.

Back to Cory’s thread. Then Cory presented this from Kahneman’s paper:

My hope is that these and other variants of adversarial collaboration may eventually become standard. This is not a mere fantasy: It would be easy for journal editors to require critics of the published work of others—and the targets of such critiques—to make a good-faith effort to explore differences constructively. I believe that the establishment of such procedures would contribute to an enterprise that more closely approximates the ideal of science as a cumulative social product.

Lee: I totally agree with this. Back to Cory’s thread:

21 years later, these procedures remain rare. Ceci, Williams, @PsychRabble (that’s me on X! Lee) and I just published an article explaining why ACs (adversarial collaborations) are needed to improve scientific outputs and restore credibility to behavioral science:

We include a list of 60 debates that would benefit from ACs (adapted from an earlier list made with Phil Tetlock). But this is a drop in the bucket of potentially millions of published papers that contradict other published papers.

Cory continued:

And some scientists can and will do them. Tetlock & I have been working with dozens of high integrity scholars at @AdCollabProject. These projects are hard, and we are still learning best practices, but they can be done.

Cory then directed anyone interested in understanding how to do so here:

Cory’s thread continued:

To expedite adoption of ACs, those who control incentives in science (publishers, employers, editors, funders, etc.) should reward and encourage ACs from scholars who publish conclusions that contradict other conclusions.

My hope is that over time, ACs could change the culture of science to one where scientific disagreements are resolved faster and more cordially, and belief updating is embraced as a normal and desirable part of the scientific process rather than an embarrassing career blunder.

Enter Chris Ferguson, discussing why … maybe not so fast.

It’s good and I largely agree. I think it requires some kind of larger cultural change though. As I responded to Cory, in many of these areas scholars have often worked hard to destroy each other. The idea they’d then want to blow several years of precious life with the constant stress of working together is fantasy, I think. You could “force” them, but I’m not sure that’s quite right.

That leaves me with two thoughts. First, having done a couple of these, they seem to work best when they involve people who like each other but have different views on a topic, who are legitimately curious whether they are right, and who think it’s cool if they aren’t, rather than being in some kind of position to defend their honor. Second, much of the culture in academic publishing right now involves the fear of humiliation. Comment/replies are often like this…hell, the most fun ones to read are like this. I suspect that reflects both human nature and what literally “sells” (and yes, I’ve had editors largely confirm this). You’d have to create a science culture that gives people much more freedom to be wrong, have null results, etc. I’d love it! But I’m not sure how likely it is. So the ideas are right on, but it can seem a bit like arguing humans should stop fighting wars.

What do you think? Am I too pessimistic?

I have no idea who this is, but his X handle says he is an anthropologist. Note that his handle is at Bluesky, which is where many academics retreated in stark terror when Musk took over Twitter (I am not saying this is the case here, just saying that this was common):

Lee here: I agree with Hames, but Chris’s reply was interesting:

Ideally, yes. Albeit it would require a significant cultural change, and a lot of change in incentives in academic publishing. And a lot less focus on moral/policy implications of social science. Why would someone collaborate with another person who is morally wrong if that collaboration would result in the "wrong" policy, etc. TBC, that's not my perspective. I just think...for literal decades everyone has known we shouldn't focus on p-values but rather a conservative read of effect sizes. But the degree to which this has changed procedure in the field or made it less likely individual scholars would exaggerate the significance of their results (or acknowledge the likelihood those results are noise) has been, I think, between 1-5%? So I'm pessimistic about stuff at the top of my wish list too. Social science is largely garbage, and making it not garbage would require huge cultural changes to the way we do things (and TBC @PsychRabble and @ImHardcory (this is Cory Clark’s X account) are among the good guys).

Ok, back to me.

I replied to Chris with this:

Please forgive the typo; “prescriptive” should have read “prescriptively.”2 In short, I think we are all mostly correct here. My point is that Cory, my collaborators, and I are making a prescriptive argument, an argument for how things should be. Chris, in contrast, is arguing something like “maybe so, but back in the real world, there is usually so much hostility between those in opposing camps that adversarial collaborations are likely to be few and far between and our call for more will go (mostly) unanswered.”

He is probably (mostly) right.

But the fact that he is (mostly) right is an argument for practical difficulties, not an argument against ACs in principle, which he also clearly endorses, when possible. I have no idea whether our (and others’) call for ACs will have any influence on anyone’s behavior, but you never know whether advocating for this sort of culture change will end up in a totally useless dead end or actually change at least some people’s behavior. Many improvements to psychological science practices (such as open data and materials, pre-registration and registered reports (I briefly explain these later in this essay), larger samples, reporting of effect sizes along with p-values, etc.) started with reformers screeching into the abyss for years or even decades. And then, all of a sudden, many of these reforms became far more common and, in some cases, normative. You bang your head against the wall, over and over, and mostly you get a headache without the slightest shred of evidence that the wall is collapsing. But sometimes, not often, but once in a while, you bang your head one more time and the wall comes crashing down.

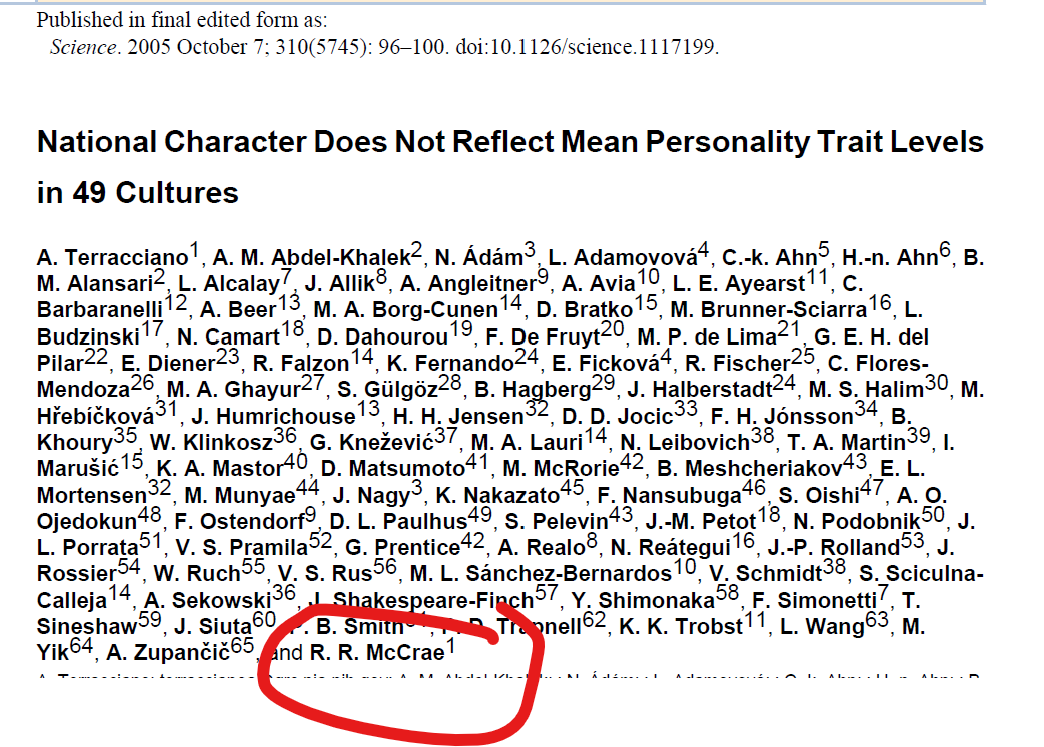

I have engaged in several adversarial collaborations in my career, including one that took place before the term became widely used. They have all been terrific. In 2005, a team led by R.R. McCrae (senior author, listed last) published this article finding complete inaccuracy in national stereotypes.3

Now, by this time, I had published a slew of findings of surprisingly high levels of accuracy in social stereotypes, such as some that appeared in this 1994 book:

Much to my surprise, some time in the mid 2000s, McCrae contacted me, inviting me to collaborate on further work he was leading to assess the accuracy of stereotypes. I loved this idea, for several reasons: 1. The mere fact that he invited me strongly suggested that we would not have the type of intractable hostility that Chris was (in many cases justifiably) concerned about; and 2. I knew he was a hardcore quant/data type and saw no evidence of him grinding political axes in any of his (extensive) prior work. I guess I should add this too: 3. My claims were always about the accuracy of particular stereotypes examined in particular studies and never assumed stereotypes were always or by necessity accurate. Accuracy is, in any particular case (any particular person’s or sample’s particular beliefs about one or more specific groups), an empirical question. So I jumped in whole hog and this led to three papers, two finding high accuracy in sex and age stereotypes and one replicating his finding of almost complete inaccuracy in national stereotypes.4

I also have an adversarial collaboration in progress. In 2012, this team produced a paper finding something conducive to widespread academic beliefs about sexist biases in the academy. It’s been cited thousands of times and the lead author got invited to Obama’s White House.

It’s a pretty small study and has never been replicated. Until now.

This paper is in progress. It is a registered replication report, meaning that, before collecting data, we wrote up a report describing exactly how we were going to conduct the studies (3! not just one as in the original paper), including much larger sample sizes, and submitted it to journals. And we followed their methods almost to the letter (not counting a few times where they were not clear about what they actually did). After being rejected by PNAS (the journal Moss-Racusin published in; talk about dysfunctions in academic norms…), it was given a provisional acceptance at the journal Meta-Psychology. Although they won’t make a final decision until we send in the completed paper, unless they judge we screwed up bigtime, they will publish it.

In addition, this was an adversarial collaboration. Nate and I thought the original was unlikely to replicate, and if it did, it would be with much smaller bias effect sizes. Neil and Akeela thought it more likely to replicate but probably with smaller effect sizes.

I am not going into all the details here until the paper is accepted, but let’s just say we found the exact opposite of what the original found — STEM faculty were biased against male, not female, job applicants.

As Cory, my collaborators, and I wrote in our recent paper advocating for ACs (note that, in this excerpt, RR refers to registered reports, in which original research is accepted by a journal based on the proposal, not the findings, and RRR refers to registered replication reports, like our replication of the Moss-Racusin et al. study):

However, RRs and RRRs work well with ACs. ACs can help ensure that RRs and RRRs truly are the fairest and most rigorous tests of the hypotheses they purport to test. And RRs or RRRs help lock adversaries into an explicit research plan to avoid post hoc quibbling about a priori predictions. RRRs are almost inevitably adversarial in that they challenge an earlier finding, so AC approaches would almost always be appropriate for RRRs (assuming advocates of the findings in question are still active researchers). And given the risks of interpersonal conflict in ACs, ACs would almost always benefit from RRs. In other words, we see these approaches as mutually beneficial to each other.

When it comes out, I doubt it will change the minds of the many true-believers in sexist biases in STEM. In an upcoming post, I will present in some detail the evidence that academics publishing in the journals mostly ignore failed replications (although this is not merely a failed replication, it is a complete reversal). You keep banging your head against the wall and, mostly, you just get a headache. But once in a while, eventually… you never know.5

Footnotes

1. Cognitive dissonance refers to a venerable line of research, generally finding that, if you can get people to act in some way that violates their cherished beliefs and attitudes, or self-interest, they often change their attitudes to get closer in line with their behavior. However, cognitive dissonance was also an early unnamed “replication crisis” because lots of people had trouble finding these effects. These issues are beyond the scope of the present essay.

2. “tl;dr” is short for “too long, didn’t read” and is a very short upshot of a much longer analysis. That longer analysis is this essay.

3. When the 2005 article on inaccuracy in national stereotypes came out, Mahzarin Banaji, who was then President of the Association for Psychological Science, sent an email to the whole society alerting us to this “important” (her word) research.

4. Banaji did not send any emails alerting anyone to the work finding accuracy of gender or age stereotypes.

5. For reasons I do not understand, Substack linked to the WRONG Chris Ferguson’s Substack. THIS Chris Ferguson can be found at:
